ARP Issues in Large-Scale Clustered Applications over VxLAN

This article explores how scaling a clustered application with an all-to-all communication pattern caused a surge in ARP traffic over VxLAN-EVPN, resulting in network issues. It details the diagnosis process and the solution implemented.

Ori Acoca

3/18/2025

Note: This article assumes VxLAN knowledge.

Observing the Issue

While scaling out a k8s-based clustered application spanning over 500 servers, where each node communicates with every other node, we observed instability, degraded performance, and a high error rate. Logs and metrics pointed to network-related issues, including retransmissions and disconnections.

Initial Troubleshooting:

Ping test from source to destination server:

To isolate the issue, we tested network connectivity between nodes independently of the application using a simple ping test:

• Same Rack (Same Leaf Switch): No packet loss.

• Different Racks (Separate Leaf Switches): Significant packet loss.

Packet capture on source and destination servers:

To investigate further, we performed simultaneous packet captures on the source and destination servers to trace ICMP echo requests and replies.

Key Findings:

ICMP Response Consistency: ICMP requests were always answered when they reached the destination server.

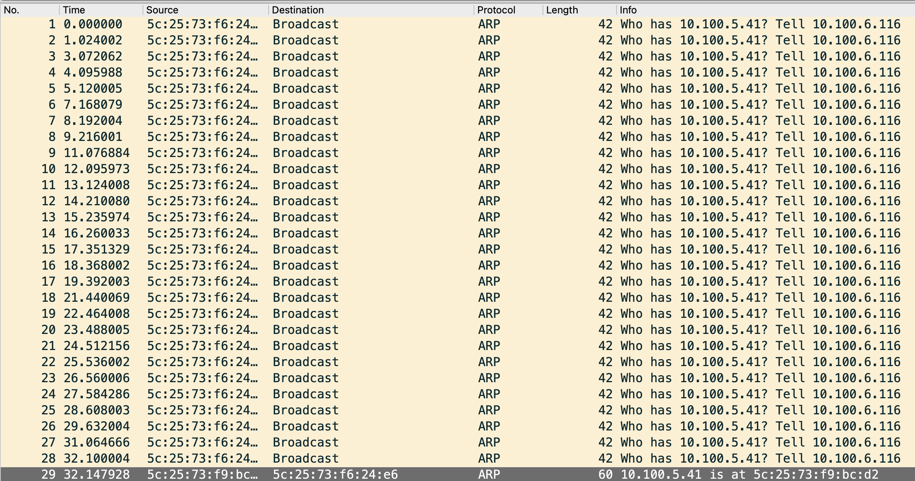

Intermittent ARP Issues: The source server periodically stopped sending ICMP requests and instead sent ARP requests to resolve the destination server’s MAC address. Most ARP requests went unanswered, but approximately every 30th request (after 30 seconds) received a reply, restoring ICMP communication. This delay aligned with the observed packet loss.

This behavior was consistent across all servers, confirming an ARP-related issue.
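The request-versus-reply pattern described above can be quantified from a text capture. The following is a minimal sketch, assuming the capture was saved as the one-line-per-packet summaries that tcpdump prints for ARP frames; the function name `summarize_arp` and the sample addresses are hypothetical and not part of the original investigation.

```python
import re

# tcpdump prints ARP frames in this form:
#   ARP, Request who-has 10.0.0.2 tell 10.0.0.1, length 28
#   ARP, Reply 10.0.0.2 is-at aa:bb:cc:dd:ee:ff, length 28
REQUEST = re.compile(r"ARP, Request who-has (\S+?)(?: \(\S+\))? tell ")
REPLY = re.compile(r"ARP, Reply (\S+) is-at ")


def summarize_arp(lines):
    """Return {target_ip: (requests_seen, replies_seen)} for a text capture."""
    requests = {}
    replies = {}
    for line in lines:
        match = REQUEST.search(line)
        if match:
            ip = match.group(1)
            requests[ip] = requests.get(ip, 0) + 1
            continue
        match = REPLY.search(line)
        if match:
            ip = match.group(1)
            replies[ip] = replies.get(ip, 0) + 1
    return {ip: (count, replies.get(ip, 0)) for ip, count in requests.items()}


if __name__ == "__main__":
    # Hypothetical capture excerpt: three requests, only the last one answered.
    capture = [
        "12:00:01.000000 ARP, Request who-has 10.0.0.2 tell 10.0.0.1, length 28",
        "12:00:02.000000 ARP, Request who-has 10.0.0.2 tell 10.0.0.1, length 28",
        "12:00:03.000000 ARP, Request who-has 10.0.0.2 tell 10.0.0.1, length 28",
        "12:00:03.000400 ARP, Reply 10.0.0.2 is-at aa:bb:cc:dd:ee:ff, length 28",
    ]
    for ip, (asked, answered) in summarize_arp(capture).items():
        print(f"{ip}: {asked} requests, {answered} replies")
```

A large gap between the two counters for a given target is the signature seen in this incident: many requests, very few replies.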

Understanding ARP handling in VxLAN-EVPN

In a VxLAN-based network with an EVPN control plane, MAC+IP addresses of servers are announced via EVPN to leaf switches.

Because a VxLAN broadcast domain can span a very large number of hosts, VxLAN-EVPN networks use ARP suppression to limit ARP broadcasts.

With ARP suppression enabled, leaf switches respond to ARP requests received from locally attached servers on behalf of remote servers, based on information learned from the EVPN control plane. This prevents ARP requests from being broadcast to all other leaf switches and servers.
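The decision a leaf makes under ARP suppression can be sketched as a toy model. This is an illustration of the behavior described above, not switch code: the class `LeafSwitch`, its methods, and the addresses are hypothetical.

```python
class LeafSwitch:
    """Toy model of a leaf switch that answers ARP from EVPN-learned state."""

    def __init__(self, suppression=True):
        self.suppression = suppression
        self.evpn_hosts = {}  # IP -> MAC, as learned from EVPN MAC+IP routes
        self.flooded = 0      # requests that had to be broadcast over the fabric

    def learn_from_evpn(self, ip, mac):
        self.evpn_hosts[ip] = mac

    def handle_arp_request(self, target_ip):
        # With suppression on and the target known, reply locally on behalf
        # of the remote server instead of flooding the request.
        if self.suppression and target_ip in self.evpn_hosts:
            return ("proxy-reply", self.evpn_hosts[target_ip])
        # Otherwise the request is flooded to the other leaf switches.
        self.flooded += 1
        return ("flood", None)


if __name__ == "__main__":
    leaf = LeafSwitch(suppression=True)
    leaf.learn_from_evpn("10.0.1.20", "aa:bb:cc:00:01:20")
    print(leaf.handle_arp_request("10.0.1.20"))  # answered by the leaf
    print(leaf.handle_arp_request("10.0.9.99"))  # unknown target, flooded
```

Note that in the proxy-reply path the leaf itself has to process each request, which is why this mechanism depends on the switch’s control plane keeping up.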

Further Investigation

Packet capture on source server and leaf:

To confirm whether ARP suppression was causing the issue, we captured ARP traffic simultaneously on the source server and on the corresponding VLAN interface (SVI) of the local leaf switch. Our expectation was that every ARP request leaving the server would also be visible on the switch’s SVI.

However, we observed that:

Only a small number of ARP requests leaving the server were seen on the switch’s SVI, leading to ARP resolution failures.

Whenever an ARP request reached the switch’s SVI, a reply was sent back as expected.

Disabling ARP Suppression:

To test whether ARP suppression was involved, we temporarily disabled it:

"nv set nve vxlan arp-nd-suppress off"

"nv config apply -y"

Once ARP suppression was disabled, the issue disappeared.

Servers began communicating smoothly, and ARP requests received immediate responses.

However, this resulted in a significant increase in ARP traffic across the data center, which was undesirable at our scale.

Why Was ARP Suppression Failing?

At first glance, this looked like unexpected ARP suppression behavior or a possible bug.

However, the switch’s internal architecture explains why it occurred.

Switch Architecture:

Switches can be divided into two key functional areas:

Control Plane: Manages data flow, routing, and processing. Includes the switch’s CPU and OS, which run upper-layer protocols (BGP, OSPF, LACP, ARP, etc.).

Data Plane: Forwards the actual packets based on information from the control plane. Implemented in hardware by the switch’s ASIC (silicon chip).

ARP Processing Overload

Since ARP requests are broadcast, they are sent to all devices, including the switch. In our case, leaf switches responded to ARP requests on behalf of remote servers. However, in an all-to-all clustered application with thousands of nodes, such a volume of ARP requests can overload the switch’s CPU and prevent other protocols from functioning.
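A back-of-the-envelope estimate shows how quickly the ARP load on a single leaf grows with cluster size. This is a rough sketch under stated assumptions, not a measurement: it assumes each server re-resolves every peer once per refresh interval and that each of those requests reaches the local leaf’s CPU. The function name, the 20 servers per leaf, and the 30-second interval are hypothetical values chosen for illustration. Real hosts may refresh neighbors on different timers or with unicast probes, so treat the result as an upper-bound illustration.

```python
def arp_requests_per_second(cluster_nodes, servers_per_leaf, refresh_interval_s):
    """Estimate ARP requests per second arriving at one leaf switch.

    Assumes every locally attached server re-resolves each of its peers
    once per refresh interval (an all-to-all communication pattern).
    """
    peers_per_server = cluster_nodes - 1
    return servers_per_leaf * peers_per_server / refresh_interval_s


if __name__ == "__main__":
    # 500 nodes as in the cluster above; the other two values are assumptions.
    for nodes in (100, 500, 2000):
        rate = arp_requests_per_second(nodes, servers_per_leaf=20,
                                       refresh_interval_s=30.0)
        print(f"{nodes} nodes: about {rate:.0f} ARP requests/s per leaf")
```

The estimate grows linearly with the number of cluster nodes for a fixed rack size, so a policer limit that was comfortable for a small cluster can be exceeded simply by scaling out.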

Control Plane Policing (CoPP)

To prevent control plane overload, switches implement Control Plane Policing (CoPP)—a mechanism that limits the number of control packets (such as ARP) escalated from the data plane to the switch CPU.

In our case, the number of ARP requests per second exceeded the CoPP-defined limit for ARP processing.

As a result, many ARP requests were dropped, causing the failures we observed.
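The effect of a rate limit with a burst allowance can be illustrated with a generic token-bucket policer. This is a simplified model for intuition only; it is not how the ASIC implements CoPP, and the class, the function, and the traffic numbers are hypothetical.

```python
class TokenBucketPolicer:
    """Generic token bucket: `rate` tokens per second, capacity `burst`."""

    def __init__(self, rate, burst):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # bucket starts full
        self.last = 0.0
        self.forwarded = 0
        self.violated = 0

    def offer(self, now):
        """Offer one packet at time `now` (seconds); return True if forwarded."""
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            self.forwarded += 1
            return True
        self.violated += 1
        return False


def simulate(policer, packets_per_second, seconds):
    """Send evenly spaced packets and return (forwarded, violated)."""
    for i in range(packets_per_second * seconds):
        policer.offer(i / packets_per_second)
    return policer.forwarded, policer.violated


if __name__ == "__main__":
    # Offered load is four times the policer rate (illustrative numbers).
    forwarded, violated = simulate(TokenBucketPolicer(rate=100, burst=100),
                                   packets_per_second=400, seconds=10)
    print(f"forwarded={forwarded} violated={violated}")
```

Once the initial burst is used up, only about as many packets as the configured rate get through each second and the rest are counted as violations, which matches the pattern of most ARP requests going unanswered while an occasional one succeeds.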

Identifying ARP Drops

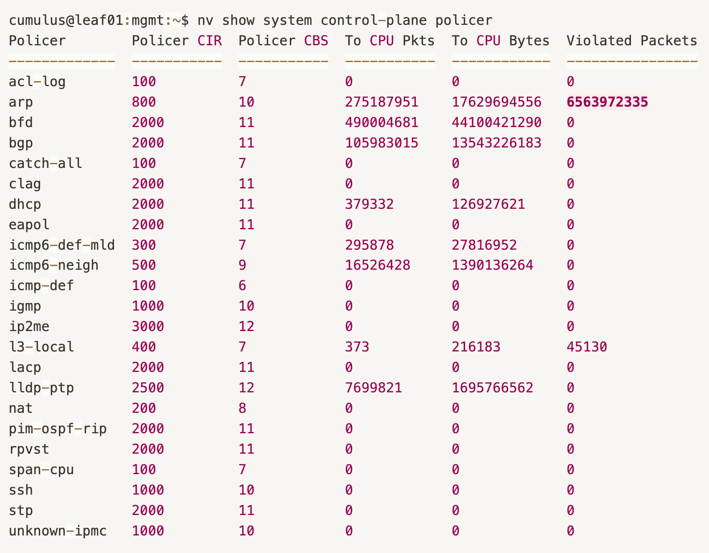

In NVIDIA Cumulus switches, the defined trap groups and their limits can be checked using:

"nv show system control-plane policer"

This revealed a high number of Violated Packets for ARP: 6,563,972,335 ARP packets had been dropped on that single leaf switch alone, which explains why ARP resolution was failing.

Solution: Adjusting ARP Policer Limits

To resolve the issue, we raised the burst and rate limits of the policer for the ARP trap group, allowing more ARP packets to reach the CPU without being dropped:

"nv set system control-plane policer arp burst 4000"

"nv set system control-plane policer arp rate 4000"

"nv config apply -y"

Note:

Before making this change, we verified that sufficient CPU resources were available by monitoring the switch’s CPU utilization.

It’s important to remember that trap groups exist for a reason. While their limits can be adjusted, any changes should be made cautiously.